Last summer, we were awarded a Knight Foundation Prototype Fund grant along with Prime Access Consulting (PAC) and the Cooper Hewitt, Smithsonian Design Museum to complete a project that uses voice to engage people in the arts. Together, we’re creating an Alexa voice skill that will simulate exploring an exhibition at the museum in person. This brings the museum experience to anyone with an Amazon Alexa device, enabling remote access for those who are unable to visit the museum.

We kicked off the project in early August using Scrum practices, following the process below to refine the product vision and prioritize an initial backlog.

The goal of the project is to use Cooper Hewitt’s extensive collection to reach and inspire audiences beyond the current physical gallery experience. The skill will be a “choose your own adventure”-style experience that allows users with an Amazon Alexa device to immersively explore parts of Cooper Hewitt’s galleries from their own homes and classrooms.
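To make the “choose your own adventure” idea concrete, here is a minimal sketch of how a branching tour could be modeled as data. It is an illustration only, not the skill’s actual implementation: it does not use the Alexa SDK, and the `Stop` class, the `TOUR` contents, the stop names, and the `next_stop` helper are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Stop:
    """One point in the tour: what the listener hears and where they can go next."""
    description: str
    # Maps a spoken choice (lowercase) to the id of the next stop.
    choices: Dict[str, str] = field(default_factory=dict)


# Hypothetical tour layout, invented for illustration.
TOUR = {
    "entrance": Stop(
        "You are at the entrance to the exhibition.",
        {"go left": "gallery_a", "go right": "gallery_b"},
    ),
    "gallery_a": Stop("You are in the first gallery.", {"go back": "entrance"}),
    "gallery_b": Stop("You are in the second gallery.", {"go back": "entrance"}),
}


def next_stop(tour: Dict[str, Stop], current: str, spoken_choice: str) -> str:
    """Return the id of the next stop, or stay put if the choice is not recognized."""
    normalized = spoken_choice.strip().lower()
    return tour[current].choices.get(normalized, current)


# Walk one step of the tour.
position = next_stop(TOUR, "entrance", "Go left")
print(TOUR[position].description)
```

Keeping the tour as plain data like this would let content authors add or reorder stops without touching the navigation logic.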

User Personas: Designing With the User in Mind

We gathered in the beautiful Cooper Hewitt building and got to work on the kickoff in one of their conference rooms. We were incredibly excited to get to work with Sina Bahram of Prime Access Consulting again. His profound knowledge of accessibility is a powerful asset to any team. To help set the scene a little further, Sina is blind. Therefore, inclusive design became a part of this project from its inception. It wasn’t simply a feature set that would become part of our backlog, but instead an innate part of both the project and the process. We applied accessible design thinking to our agile practices themselves; we had to be intentional about the way we proceeded, ensuring every feature description was read out loud and fully discussed. Reading out everything we wrote on the board also led us to question each item, which helped us create succinct yet thorough user stories.

The first exercise at the kickoff meeting was focused on determining who our users would be and how they would interact with the Alexa skill. We had to ask ourselves several questions:

- How familiar would the users be with Cooper Hewitt and its collections?

- What prior experience would they have with voice skills?

- What would users hope to find when they opened the skill?

Answering those questions helped us determine what features the skill might have and gave us guidelines for ranking and prioritizing what we needed to build.

We needed to focus the first version of this skill on a narrow group to make sure we met the most important user needs, but still build it with enough flexibility that it would work for many types of visitors and situations.

The next question we had to ask was, “What would provide the greatest value to our users?” The answer would define our MVP, or Most Valuable Product, as opposed to the more traditional Minimum Viable Product.

We started by stating what our initial limitations would be.

- The user would need to have an Alexa device.

- The user would need to be remote (not physically in the museum).

- The user would need to be an English speaker (we planned to save translations for a later iteration of the project).

With the basics established, we began defining the persona of the skill’s primary user, and what we might offer them.

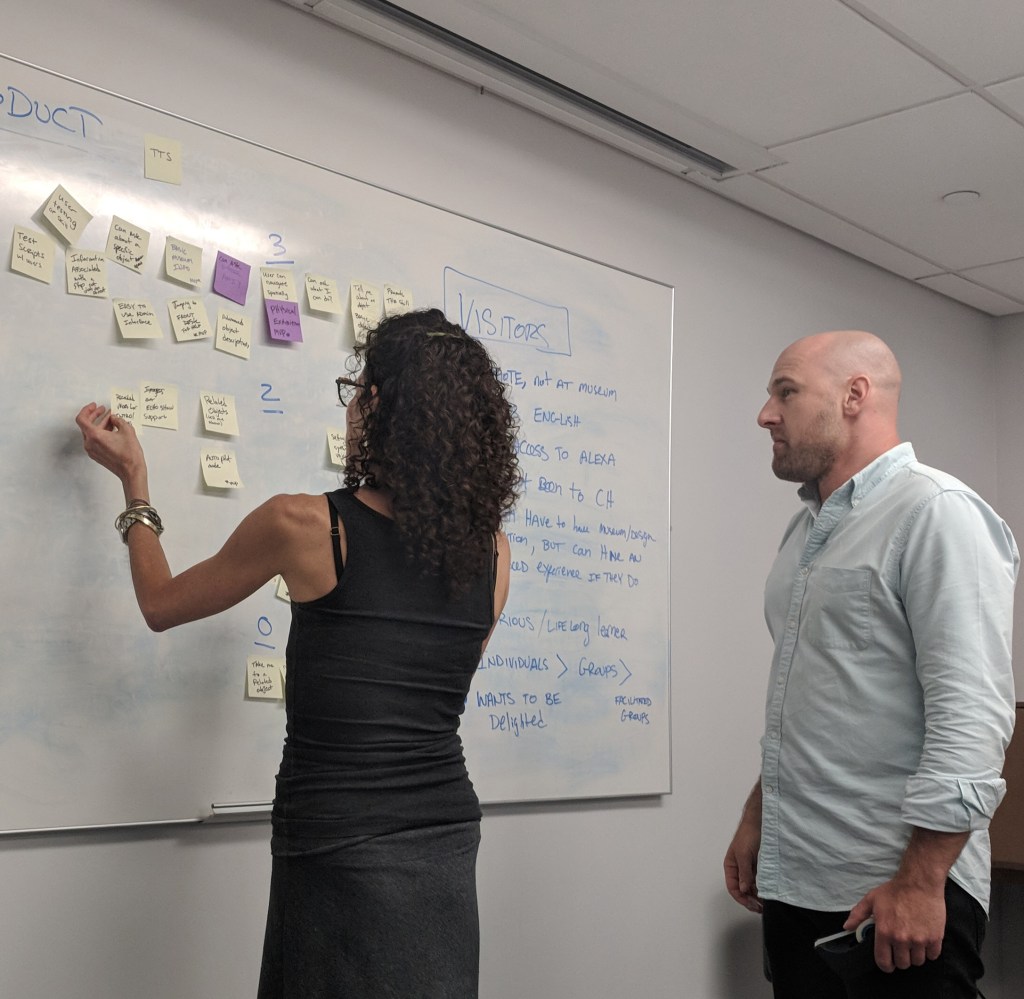

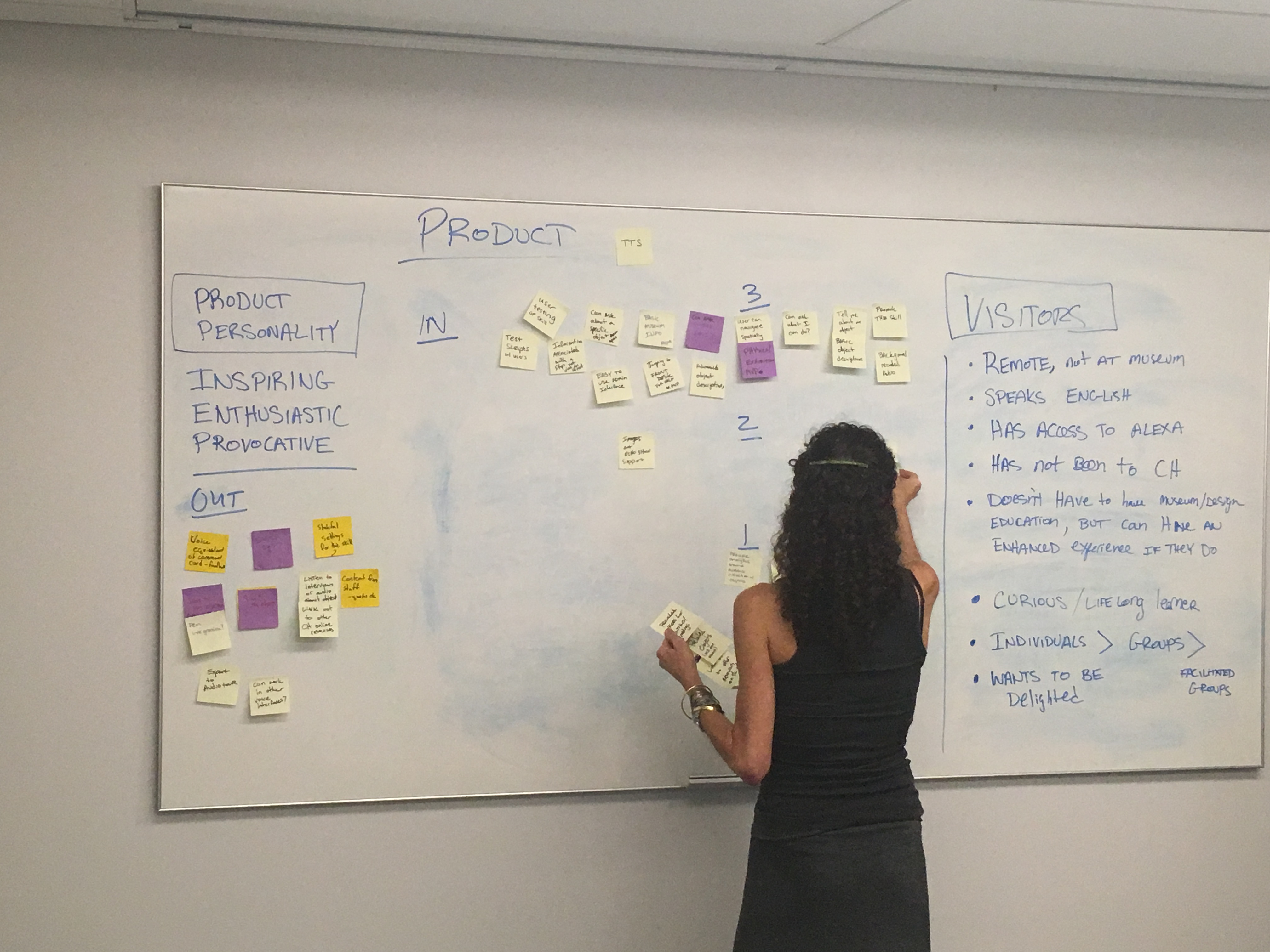

We jotted down every character trait that corresponded to types of people who might use the skill and laid them all out on our whiteboard. Next, stakeholders from each team took turns voting on the most relevant characteristics. The ideas with the most votes moved to the top, and we continued to restructure the board until we came up with a ranked list of traits for our user personas. Traits that did not make the MVP list were moved to the backlog to be saved for future iterations. After a couple of rounds of voting, we decided that the first persona would be someone with the following characteristics:

- Hasn’t been to the Cooper Hewitt.

- Doesn’t necessarily have a design background but is interested in Cooper Hewitt.

- Is a curious and lifelong learner.

- Is looking to be delighted.

These qualities immediately shaped how we wanted the application to perform. For example, if users are looking to be delighted, we’d prioritize making the voice skill an experience with an emphasis on interesting visual descriptions, rather than giving the user access to the largest possible number of objects.

Product Persona: Putting the “Voice” in Voice Skill

When we enter the design process, we consider factors that might be fairly small decisions on their own, but that together end up shaping the user experience into its final form. For a website, design decisions regarding branding, site navigation, careful font choices, and more culminate in an experience that we recognize as a brand “voice.” A challenge unique to developing a voice skill is that you have a literal voice, language usage, and personality to shape. In this particular case, we also wanted to use recorded audio from the museum and its staff that would add a layer of personality beyond the standard Alexa interface.

To do this well, we had to discuss what the personality of the skill itself would be. The key to making a voice app interesting is having a consistent personality that you can use to shape copy, tone, and other aspects of the speech interface.

We relied on the Cooper Hewitt team to lay out the essential aspects of their brand. They brainstormed what it might sound like if the app were just a regular person you’d meet in daily life. We wrote everything down on Post-its, stuck them on a whiteboard, and the Cooper Hewitt team took turns reordering the list. Soon, we started to see the same three traits showing up at the top of each list: inspiring, enthusiastic, and provocative (as in, thought-provoking). This was it! We now had the voice of Cooper Hewitt, and something we could code and craft consistent copy around.

Product Backlog: Adding a Dash of Agile

Once our user personas were defined and we understood the voice of Cooper Hewitt, our final task of the day was to create our product backlog. A product backlog is an evolving list of all the tasks that need to be done to add value to a project. After hundreds of kickoffs, we came prepared with a time-boxed agenda, Post-it notes, and stickers for dot-voting. This final exercise of the day was an excellent candidate for this set of collaboration and prioritization tools.

With 75 minutes on the timer and Post-it notes in hand, the team documented every possible feature we could think of, without worrying about timeline or budget constraints. People worked individually and brainstormed together all the ways a user could interact with the skill and the journey they might take, contributing ideas from multiple perspectives. Alley and PAC’s expertise in voice technology helped us bridge the gap between what we could imagine a user doing and the tools we could use to make those interactions work with voice.

Several rows of Post-its began to form on the whiteboard as the group worked. Alley’s facilitators began to cluster the Post-its into affinity groups on related themes. Finally, we went through several rounds of dot-voting to narrow the list down to a subset of MVP features. As in previous sessions, those features that did not make the final cut were moved further down the backlog. We started to see the skeleton of the project’s first iteration emerge.
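The dot-voting step described above can be sketched in a few lines. This is a rough illustration under stated assumptions, not a record of our actual tooling (we used Post-its and stickers): the `dot_vote` function, the feature names, the ballots, and the MVP size of two are all hypothetical.

```python
def dot_vote(features, ballots, mvp_size):
    """Tally dots per feature, rank by dot count, and split into MVP and backlog.

    features: feature names in the order they appear on the board.
    ballots: one list of feature names per participant (one entry per dot).
    mvp_size: how many top-ranked features make the MVP cut.
    """
    tally = {feature: 0 for feature in features}
    for ballot in ballots:
        for feature in ballot:
            if feature not in tally:
                raise ValueError(f"Vote for unknown feature: {feature!r}")
            tally[feature] += 1
    # sorted() is stable, so tied features keep their original board order.
    ranked = sorted(features, key=lambda feature: -tally[feature])
    return ranked[:mvp_size], ranked[mvp_size:]


# Hypothetical features and votes, invented for illustration.
features = [
    "describe an object",
    "move to the next room",
    "repeat the last description",
    "hear a staff recording",
]
ballots = [
    ["describe an object", "move to the next room"],
    ["describe an object", "hear a staff recording"],
    ["move to the next room", "describe an object"],
]

mvp, backlog = dot_vote(features, ballots, mvp_size=2)
print("MVP:", mvp)
print("Backlog:", backlog)
```

Features that miss the cut are returned in ranked order rather than discarded, mirroring how lower-voted Post-its moved further down the backlog instead of off the board.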

Now we had a workable product backlog we could take back to our teams and flesh out into user stories and epics. We even had a semi-prioritized “blue sky” backlog we could come back to once we were ready to begin version 2.0. We will update it with data collected from the first iterations, which we will use to test where our user base surprises us or departs from our assumptions.

As an emerging technology, voice has offered us many opportunities to push the boundaries of our development and Scrum practices. We’ve never taken a one-size-fits-all approach to voice-app development, and we have no interest in remaining static in our Scrum and development practices, either. We’re excited to see the ways that future iterations of this Cooper Hewitt voice skill project will extend the museum experience to all users while also compelling us to adapt our practice areas. We are always learning and growing, and we’ll continue to post updates as this project continues!